In the paper "Are Large Language Models a Threat to Digital Public Goods? Evidence from Activity on Stack Overflow", researchers look at the dwindling traffic and activity on Stack Overflow (which announced its own generative code model to save itself). The decline points to a downward spiral for AI development: the more people use AI products for coding, the less human-written data is created. That is interesting in itself, but I'd like to drive home another point about why LLMs are a threat to digital public goods that not many people are talking about.

Now, renowned tech critic Evgeny Morozov posted a few tweets about "socialist AI" recently. On a side note: I watched the Berlin Wall come down and, to put it bluntly, I don't like socialism, as it has been proven not to work over and over again. But I also think that capitalism works best with some socialism sprinkled all over it, with plenty of strong social services for those who need them, including libraries.

In my piece "On Stochastic Libraries", I already made the case that because foundation models are trained on public data, they should be run like public libraries: by the public sector, for the people, free to use and "to ensure safety, ethical production and prevention of abuse. Running foundational models by the public would also ensure data transparency and work against the 'black boxing' of this tech."

To me, a social democrat who likes capitalism, multibillion-dollar companies sucking up public data, including coding expertise on Stack Overflow and the creative work of millions of people, without compensation or consent, and building commercial products on top of that data for which other people then pay 300 bucks per month, just sounds unfair.

At the very least, AI-tech means we have to get serious about a universal basic income. This looks like the mother of digital extractivism to me, and, as they say, we the people should confront that. Thankfully, many do.

Related: The coming issue of Time Magazine is aptly titled "AI by the people, for the people", and its main story is about an Indian Startup Making AI Fairer — While Helping the Poor: "Like its competitors, it sells data to big tech companies and other clients at the market rate. But instead of keeping much of that cash as profit, it covers its costs and funnels the rest toward the rural poor in India. (...) In addition to its $5 hourly minimum, Karya gives workers de-facto ownership of the data they create on the job, so whenever it is resold, the workers receive the proceeds on top of their past wages."

In "OpenAI will crush Sarah Silverman", Evan Zimmerman makes the case that copyright cases against AI companies will fail because machine learning is transformative use.

He's not wrong, but he fails to mention that fair use in the US protects research from copyright claims, and that those LLMs and image synthesis algorithms are by now commercial products that share the same market as the complaining authors. Both points are highly relevant in fair use cases.

And from an ethical standpoint: Sure, maybe we want "machines to be able to learn from the world, and creative works are part of it", but these machines are not the same as the art student going to a museum and drawing stuff based on existing art. These machines are algorithms developed by multibillion-dollar companies using unlicensed data. This is fine for research, but not for commercial products.

All of this seems to fly over Zimmerman's head, and I think his arguments are deeply flawed, even when he thinks that "there will ultimately be some commercial arrangement that enables creators to contribute to the AI revolution and get paid". This, after all, is what these lawsuits are about: Fair compensation for the nonconsensual contribution to a commercial product.

Related articles: BBC: New AI systems collide with copyright law; Guardian: Authors call for AI companies to stop using their work without consent; WSJ: Outcry Against AI Companies Grows Over Who Controls Internet’s Content

In "If Play is a Sign of Intelligence, AI is Unintelligent", Zohar Atkins makes the case that none of the news about AI succeeding at this or that educational test says anything about the actual intelligence of these systems, and I agree.

AI is just a stochastic library cobbling together interpolations on its database of a trillion weights, based on your input, and it doesn't have any idea about the content of the stuff it is creating.

In Atkins's view, no AI is platonic; it has no clue about a world of ideas: If you show an AI a photo and a painting of an apple, it will identify an apple in both. But neither is, in a platonic view, an apple. They are images. An AI has no clue about images or its own output. And ultimately, because AI can't suspend disbelief in playful engagement with its input, like humans do when they play, this is a clear sign that these systems are not intelligent at all.

I think he's right about all of that.

At the Edinburgh Fringe Festival, today there will be an AI-assisted show with a robot on stage throwing AI-generated bits and performers improvising upon them. Here's a short BBC-clip of that performance. The intersection of humor, absurdity and AI is highly relevant, as playfulness and the recognition of paradoxical stuff are, to me, clear signs of true intelligence. And yes, the great Craig Ferguson had a punk skeleton robot in his show, but it was manually operated and didn't use the cat cannon itself. (While we're here: I just found an old Craigyferg supercut I did back in 2015 when he retired. He's still the best, by a very long shot.)

Related: Do androids laugh at electric sheep? Study challenges AI models to recognize humor.

Humans are still chief tricksters, but the robots are coming: "Using hundreds of entries from the New Yorker magazine's Cartoon Caption Contest as a testbed, researchers challenged AI models and humans with three tasks: matching a joke to a cartoon; identifying a winning caption; and explaining why a winning caption is funny. In all tasks, humans performed demonstrably better than machines, even as AI advances such as ChatGPT have closed the performance gap."

A new paper, "Accelerating science with human-aware artificial intelligence", shows that "by tuning human-aware AI to avoid the crowd, we can generate scientifically promising ‘alien’ hypotheses unlikely to be imagined or pursued without intervention until the distant future, which hold promise to punctuate scientific advance beyond questions currently pursued. By accelerating human discovery or probing its blind spots, human-aware AI enables us to move towards and beyond the contemporary scientific frontier."

LLMs are stochastic libraries containing vast interpolative latent spaces, in which you can fuse data points to create amalgams of existing stuff. With ChatGPT, you can already fuse Postmodernism with, say, Mathematics and get plausible results in the form of theories that may even make sense to us.

I also think that synthetic theory will become a mainstay of academia in the decades to come, with the natural sciences especially making leaps forward through AI predictions, possibly to be completely taken over by AI in the long run. I wouldn't be surprised if we see AI coming up with a working theory of quantum gravity within a decade from now.

I published a piece about the fundamental limitations of AI alignment recently, and now a new paper about Fundamental Limitations of Reinforcement Learning from Human Feedback (RLHF), which is tech-jargon for finetuning, aka the mask that hides the Shoggoth, concludes that "many problems with RLHF are fundamental" and some "problems with RLHF cannot fully be solved and instead must be avoided or compensated for with non-RLHF approaches".

This means that none of the modern AI-tools are safe, period.

Overreaching surveillance tech meets precrime: This AI Watches Millions Of Cars And Tells Cops If You’re Driving Like A Criminal

The hidden cost of the AI boom: Social and environmental exploitation

I usually don't link to list-style articles, but this one is good: What Self-Driving Cars Tell Us About AI Risks.

Please note that when "Human errors in operation get replaced by human errors in coding", there is a shift in responsibility too. Combined with the real-time trading of public companies like Tesla, that shift is an incentive to hide evidence of such coding errors, as shown by the recent scandal involving the suppression of complaints: Tesla’s secret team to suppress thousands of driving range complaints.

If I drive a car and hit a wall, it's my fault. If an algorithm drives my car and hits a wall, it is (most likely) the car's fault. And companies suppressing information about that is a deep red flag for both safety and trade ethics.

This is also true for LLMs in all kinds of applications like generative code or the highly questionable use of AI in the health sector: If a doctor makes a wrong decision and you die, the doctor is responsible and can be held accountable. If an algorithm makes that decision, it's the company which produced the algorithm that is responsible, and I bet a buck that we will see a big fat scandal involving such a scenario, in which an AI-service suppresses information about bad health advice which led to the death of a patient. Mark my words.

While we're at pharmabros: The future is pharmabros AI-ing new drugs from Neanderthal data: AI search of Neanderthal proteins resurrects ‘extinct’ antibiotics.

Anthropic, Google, Microsoft, and OpenAI formed a coalition to research AI safety. I usually ignore such industry newsbits as they are mostly a lot of hot air and PR speech, but this made the rounds and some people are naive enough to conclude that there is no AI race.

This is just outsourcing the creation of industry standards to a new body while anticipating the impact of incoming regulation. Regulation will come down anyway, whether or not such bodies exist, at least in countries with a functioning administration, about which I have my doubts in neoliberal nations like the US, which is why I’m afraid this is relevant.

Wired: This Disinformation Is Just for You: "Generative AI won't just flood the internet with more lies—it may also create convincing disinformation that’s targeted at groups or even individuals." I'm not very worried about a flood of disinformation, as the bottleneck for disinfo is not production cost but human attention, and thus I am much more worried about the targeting aspect here.

Redditors planted some fun disinformation in content farms using generative AI to produce "news": Redditors prank AI-powered news mill with “Glorbo” in World of Warcraft. This is fun until you realize that pretty much all publishers will have similar products in the coming years.

Generative Hacking is getting better: FraudGPT can write phishing emails and develop malware: "FraudGPT helps cybercriminals write phishing emails, develop cracking tools, and forge credit cards. It even helps find the best targets with the easiest victims." Here's another writeup: FraudGPT: The Villain Avatar of ChatGPT. You can also safely say that if researchers know about this, there are more sophisticated models available somewhere. If black hats value one thing more than anything, then it's secrecy.

Researchers implemented neural networks in DNA. This is a long way off, if it ever leads to something real, but just imagine learning nanotech robots with biomolecular neural nets.

This is fine: Israel Using AI Systems to Plan Deadly Military Operations

This is also totally fine: The AI-Powered, Totally Autonomous Future of War Is Here: "Ships without crews. Self-directed drone swarms. How a US Navy task force is using off-the-shelf robotics and artificial intelligence to prepare for the next age of conflict."

'Oppenheimer' a warning to world on AI, says director Nolan. The problem with AI as an 'Oppenheimer Moment' is that we had a pretty clear idea of what nuclear power would do: Generate energy in such-and-such amounts, to build reactors and bombs. But we have no clear idea of the impact of AI, or where that impact will take place. Economically? Psychologically and cognitively? Politically? Spiritually? All of these and then some? No idea. AI might wither away economically for some reason, but have tremendous psychological impact, or the other way around. We have no idea, and its impact is a known unknown.

In "WavJourney: Compositional Audio Creation with Large Language Models", researchers present a model capable of generating whole radio plays from a text prompt. The quality is so-so and the contents are as surprising and original as you can imagine, but services like Audible are gonna build a generative audiobook section in the coming years for sure.

Haohe Liu previewed a coming generative music model showing off good results. And there's a new auto-captioner that generates descriptions for music, producing automatic music-caption pairs for the large datasets necessary to make even better music models. The music landscape in ten years will look very different, and counterculture too.

Now I can clone your voice without finetuning a model: Dreaming Tulpa: "HierVST is zero-shot voice transfer system without any text transcripts. That means it is able to transfer the voice style of a target speaker to a source speaker without any training data from the target speaker."

Here's a fun music-to-image model which "sends an audio into LP-Music-Caps to generate a audio caption which is then translated to an illustrative image description with Llama2, and finally run through Stable Diffusion XL to generate an image from the audio".

Here are the image results for Black Sabbath's Paranoid (which is a bit on the nose and doesn’t bite the heads off bats live on stage like Ozzy, but what can you do), Radiohead's Creep (which seems weirdly apt) and David Bowie's Heroes (which I can’t remember being covered by Bon Jovi but fine, AI, fine.)

Ready Or Not: "In this world, we don't wear masks to hide, but to reveal."

Someone Used Adobe's AI Tool To 'Fix' Katsuhiro Otomo's 'Akira'. Okay. Akira is my favorite anime and manga of all time (I'm not that into manga and anime, though I do have some obsessive days from time to time where I binge Japanese animation), and you can't fix Otomo's magnum opus, painstakingly created by 70 animators working nonstop to draw and animate every single detail of that movie. When it comes to animation, Akira is in a class of its own, simple as that.

As this clip evoked some strong reactions on Insta, he deleted it there, but left it up on the Youtubes.

Having said that: I do like those AI-Photoshop-autofill horizontalizations of movies, as they just give you a fresh look at something familiar. Sure, IP holders are up in arms because of those user alterations of their sacred creations, but I'm also one of those guys who says that sacred cows need to be butchered. So here's horizontal Akira in all its glory:

Subway Story by Roope Rainisto:

Who needs illustrators for storyboards: "Using the Generative AI Blender add-on with Stable Diffusion XL(+refinement step) to batch generate visual ideas for a screenplay I'm working on"

There is nothing more annoying than thread-bros on Twitter posting listicles of ten things that never blow my mind. Having said that, here’s a thread-bro’s listicle with ten AI-cinema-vids that mostly didn’t blow my mind, because they show off the kitsch and always-same-looking stuff likeminded people come up with. This is AI-Anti-Cinema. As such, it is amusing.

Art-mag Outland devotes a whole new issue to Image Synthesis. My favorites are this piece about how "generative AI ushers in the ultimate freedom of the image from human agency", this discussion of prompt engineering as creative practice (I lean towards "no, AI image synth is not the creation of art, but exploration of a huge interpolative latent space" but am still trying to grasp what is happening), and this criticism of LLM-criticism from a poststructural perspective.

My piece on post-artificial text and the (not only) post-artificial irrelevance of the author after the AI-revolution is relevant for that last one.

OpenAI's CEO Sam Altman recently posted this take on creativity: "everything 'creative' is a remix of things that happened in the past, plus epsilon and times the quality of the feedback loop and the number of iterations. people think they should maximize epsilon but the trick is to maximize the other two." Robin Williams would like to have a word.

Ollie Bown on the Tweeties: "I'm very happy to be able to announce that my book 'Beyond the Creative Species: Making machines that make art and music' (MIT Press, 2021) is now available as an open access ePub."

The whole Twitter and Insta-feed of Frank Manzano is a sight to behold. This is what your brain feels like when you spend too much time on the web.

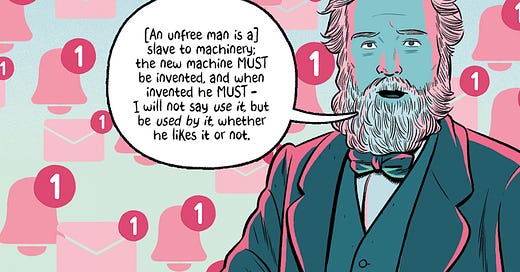

From the indie-webcomic publisher The Nib: I’m a Luddite (and So Can You!) by Tom Humberstone: "What the Luddites can teach us about resisting an automated future".

GOOD INTERNET ELSEWHERE // Twitter / Facebook / Instagram

SUPPORT // Patreon / Steady / Paypal / Spreadshirt / AI-Shirts

Musicvideos have their own Newsletter now: GOOD MUSIC. All killers and absolutely zero fillers. The latest issues featuring Slow Pulp, Truth Cult, Daughter, Enumclaw, Sløtface and many more. You can also find all the tracks from all Musicvideos in a Spotify-Playlist.

Subscribe to GOOD INTERNET on Substack or on Patreon or on Steady and feel free to leave a buck or two. If you don’t want to subscribe to anything but still want to send a pizza or two, you can paypal me.

Buy books on Amazon with this link and put some pennies into my pockets like magic.

You can also buy Shirts and Stickers like a real person.

Thanks.

😶
