As Neuralink gets human trial approval, let’s talk about Machine Telepathy

How machine telepathy can work, and why we are absolutely not ready.

One of the first posts I wrote about machine telepathy was twelve years ago, when scientists extracted visual data from brains while people watched YouTube clips and reconstructed those clips (link to my old blog in German) in rather crude early algorithmic videos, which you can find on YouTube here.

Before that, I had already posted about simple neuro-toys that could do a few tricks, like the Star Wars Force Trainer, which came out in 2009 and read your brain activity via a dumbed-down EEG device. When you concentrated and increased brain activity, a ball in a glass tube started to levitate; when you relaxed, the ball sank. Hence, you are a Jedi using the Force. Of course I have been hooked ever since, and have followed the developments of BCI-tech rather closely.

Fast forward to today: Neuralink, the Brain-Computer-Interface company run by Elon Musk, just said that the U.S. Food and Drug Administration has approved the first human clinical trial for their BCI device, after such a trial was denied in 2022. I also want to explicitly mention that Musk’s Neuralink faces heavy criticism for harsh working conditions and is under investigation for animal cruelty for killing 1,500 creatures since 2018, “including pigs, sheep and monkeys”.

Neuralink is not the first company to receive such approval: In July 2022, a BCI company called Synchron implanted its first device in a patient in the US, after already implanting their BCI in four patients in Australia, and there already exists a group called BCI Pioneers, a coalition of people with disabilities who took part in early experimental BCI procedures in a lab. It’s unclear to me how many people on earth are already living with Brain-Computer-Interfaces. ChatGPT estimates a few thousand; I would have expected that number to be much lower.

Neurotech going miles

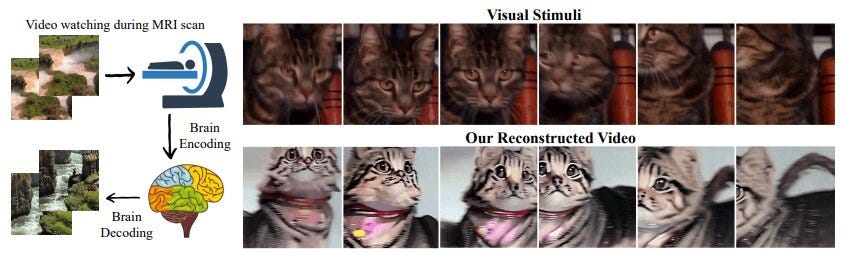

In the same month that Neuralink received approval for human trials, a new study presented an AI that was trained on fMRI scans and was able to translate human thoughts into language; a paralyzed man was able to walk again thanks to an AI-supported interface that translates signals from the brain to the spine; and a Stable Diffusion-based AI model was able to generate “high quality” videos based on fMRI data. Here’s the demo website for that last paper. What they deem “high quality” is more like a rough, low-fidelity estimate of what people actually see, but it’s impressive nevertheless. Here’s a Twitter thread with more details and links.

Other recent progress in the field includes the visualization of a dog’s view with AI, Meta decoding speech from brain activity, and a “high-performance speech neuroprosthesis”. In December 2021, Philip O’Keefe became the first person to post a tweet by thought (via a NYT portrait of early BCI pioneers). A paralyzed man was able to ask for a beer, researchers were able to record electrical activity in one single neuron, others identified the music people were listening to by decoding brain waves, and there is much more.

Six years ago, in one of the most interesting studies in the field I know of, researchers were able to identify a brain region in monkey brains responsible for face recognition, and then visually reconstruct the faces those monkeys were seeing. They also speculated about stimulating those neurons to influence the monkeys’ face recognition. One year earlier, researchers were able to not just extract the faces a human sees, but also hook into the human memory system and extract the faces of people you know.

Still, keep in mind that progress in brain-to-video extraction took ten years to get from “generating similar video from brainwaves in very low quality” to “generating similar video from brainwaves in good-ish quality with Stable Diffusion synthesis”. All this stuff is impressive, but progress in the field is not as fast as it may seem, and it’s not clear to me whether Neuralink is as advanced in the field as their PR-speech suggests.

All those things ultimately rely on the number of ultra-thin electrodes that go into your brain after opening your skull, or on the resolution of the sensors that can be implanted in blood vessels leading into the brain, which is less invasive. Neuralink’s resolution is “3072 electrodes per array distributed across 96 threads”, cited from their 2019 paper, but I’m not sure how far they have progressed since then. Here’s a good overview of recent BCI-tech which also explains the controversies surrounding Neuralink in more detail.

All of which is to say: This shit is coming and is progressing slowly but steadily into a future where we will be able to read minds by using Artificial Intelligence as a translating interface.

AI as Brain-to-Brain-converter

Back in February this year, psychologist Gary Lupyan wrote a highly interesting article in the renowned Aeon magazine about why he doubts that machine telepathy will ever be achieved beyond certain basic BCI functionalities.

His main argument: Brains are trained individually, and your brain region responsible for X reacts to a different stimulus than my brain region responsible for X. Here’s an example: In 2019, Stanford researchers identified a “Pokémon” region in our brains. That is: If you are heavily into collecting Pokémon cards, a certain brain region will show patterns that encode funny Japanese manga monsters. When you show a Pokémon collector one of those monsters, neurons in that area light up and start to fire. The problem for developing brain-2-brain interfaces is that if I am a stamp collector, the very same region in my brain will not contain Pokémon monsters, but stamps. And if you collect vinyl records, this same brain region will encode vinyl records, and so forth.

The trick for any brain-2-brain interface thus has to be to make those different encodings talk to each other: When you think about Pokémon, a b2b-interface not only has to identify the brain region, and the fact that you are thinking about your collection-subject of interest, but also what that interest actually is. A bad interface would simply tell me that Alice is thinking of Pokémon, when actually she is thinking of stamps.

In the aforementioned Aeon article, Lupyan also talks extensively about language as a protocol for the exchange of thoughts. But language also serves as a natural converter of brain activity: via language, I can not just tell you about the stuff I want or see or whatever, I can also modulate my use of language in a way that influences your perception of those thoughts, and make sure you get my point by expressing emotions. In brain-2-brain interface terms, this means that I can talk to you about collecting stuff, no matter what kind of thing you are collecting. Language converts the enthusiasm of collecting Pokémon and stamps in a universal manner: “Hey Bob, how’s your stamp collection growing? I just came back from an anime convention and boy, I do have some freaking new monsters. Let’s have a beer.” Language in this case serves as a converter of our shared neural enthusiasm for collecting stuff.

In my non-BCI-expert thinking, this should be fairly easy to solve with AI models that are trained on your neural data and automatically labeled behavior, plus a converter that makes two of those models talk to each other. When my AI model catches a thought about Pokémon, your AI model then automatically knows that it’s not a stamp but an anime monster, because the converter sends over not just the neural data, but also a sort-of header file encoding my unique neural profile. It’s very possible that future generations will share neural profiles as a sign of intimacy and trust, and I see no reason why this should be as impossible as Lupyan suggests.
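To make the header idea a bit more concrete, here’s a toy sketch in Python. Everything in it is made up for illustration: `NeuralProfile`, `ThoughtPacket`, the `collector_region` label and both decode functions are hypothetical names, and a real decoder would work on noisy neural signals, not on tidy dictionaries. It only shows the difference between reading Alice’s pattern with my own mapping and reading it with the profile she sends along.

```python
from dataclasses import dataclass


@dataclass
class NeuralProfile:
    """Hypothetical 'header': how one person's brain labels its own patterns."""
    owner: str
    pattern_to_concept: dict


@dataclass
class ThoughtPacket:
    """Hypothetical message: a detected pattern plus the sender's profile."""
    header: NeuralProfile
    pattern_id: str


def naive_decode(packet, receiver):
    # Bad interface: reads the sender's pattern with the receiver's own mapping.
    return receiver.pattern_to_concept.get(packet.pattern_id, "unknown")


def header_decode(packet):
    # Header-aware interface: reads the pattern with the sender's own mapping.
    return packet.header.pattern_to_concept.get(packet.pattern_id, "unknown")


# Same brain region, different contents (assumed toy data).
alice = NeuralProfile("Alice", {"collector_region": "stamps"})
me = NeuralProfile("me", {"collector_region": "Pokémon"})

packet = ThoughtPacket(header=alice, pattern_id="collector_region")

print(naive_decode(packet, me))  # Pokémon (wrong: that is my collection, not hers)
print(header_decode(packet))     # stamps
```

The point of the sketch is only that the mapping travels with the message, so the receiving side never has to guess what a region means for the sender.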

The kicker: I have no idea why we should develop this form of machine telepathy in the first place. Sure, it’s super fascinating to even think about a direct brain-to-brain interface where we converse by exchanging neural data and brain stimulation, but it seems slow and unreliable even once we work this out, especially when we already have this million-year-old b2b-interface called language. Nowadays we even compete to stimulate other brains with that interface, which is why we have an outrage economy on the internet.

Also, BCI technology as of now requires at the very least injecting sensors into blood vessels that lead into your brain, and most of it requires opening your skull. I don’t think anyone will open their skull for a technology that has an evolutionarily grown neurotechnology called language as an easy-to-use substitute.

Emerging Neuro-Ethics

BCI technology that translates thoughts into language surely is a literal lifesaver for paralyzed people and those with disabilities, but it also has applications that are problematic. Already, patients who received bionic eye implants were left out in the cold by companies that shut down technical support for those devices. They literally went blind because some company decided that their electronic retinal models didn’t generate enough profit. A patient with crippling cluster headaches was left in agony when a startup developing implants shut down. I’m very sure we don’t want that with brain activity.

We can also manipulate the neural makeup of soldiers on the battlefield with drugs, and the Nazis did so using methamphetamine. With BCI, we should be able to turn off whole brain regions, creating fearless supersoldiers who control drones with their thoughts. What can possibly go wrong, no question mark.

And machine telepathy opens its own whole can of worms of privacy concerns: What happens to your neural data? Do you own it? Where is it stored? Will companies use it to train AI models of people other than me? Will AI models trained on my thoughts contain stuff that I under no circumstances want made public? Will an AI thought converter hallucinate things I never dreamed up? Will companies be able to sell targeted advertising based on this neural data? Will companies be able to sell targeted advertising based on neural data that may contain stuff I under no circumstances want made public? Will machine telepathy out me as a trans person? Will machine telepathy tell my crush I’m totally into her when I’m a married guy in my fifties and she’s thirty years younger and my boss at work? Will machine telepathy tell my wife? Will machine telepathy working with AI converters generate stuff that may please my brain for some reason, but that will look totally horrible to yours? Will neurohackers be able to plant memes into my brain? Will we have neural firewalls, and will they constrain my thought process?

We have no idea.

The ethics of BCI-tech is an emerging field that has yet to find wider recognition. A first “Declaration on the ethics of brain–computer interfaces and augment intelligence” outlined elementary talking points ranging from privacy to autonomy of decision making to accountability of corporations.

Up to this point, technology has always been an external extension of our body and cognitive functions. BCI combined with Artificial Intelligence as a neuroconverter has the potential to not just be an external extension enhancing our body functions, but to transform those functions while they are formed inside our minds. This is why I think that especially with this tech, maybe even more than with AI, we should be wary of unforeseen consequences and tread very, very lightly.

So, congrats to Neuralink for this milestone. I’ll watch this space closely while we move forward.
