It's on.

Microsoft vs Google in an "A.I. arms race" for lost business cases. Plus: Stable Attribution / Salman Rushdie / Gen-1 / RIP.zip Abraham Lempel and a very badass wasps' nest

Microsoft will put GPT into everything from browsers to Office software to Bing to attack Google's dominance on the web. In response, Google will bring LaMDA-powered chat to its search, too. The viral sensation ChatGPT “kickstarted an A.I. arms race”.

In 1998, the web was dominated by Netscape and Internet Explorer fighting the "browser wars", while Google and Yahoo fought over search dominance. Two years later everything burned down, and the survivors, together with their Web 2.0 offspring, dominate the tech landscape today. Now we have the same situation again with an AI layer on top; even Yahoo wants to get back into the ring.

I'm undecided and lean towards "so what". Funnily enough, within 48 hours an astrophysicist pointed out a hallucinated error in the advertising for Google's new AI-enhanced search.

Gary Marcus writes about these hallucinations all day long, and in my humble opinion, they are exactly what makes machine intelligence interesting. They are why these systems are good at visuals and why they will be good at music, and for the very same reason they are unreliable for search or fact retrieval. There is a subreddit for legal advice by ChatGPT, and that will surely go well.

But people are well-known bullshitters who make up shit all day long, and don't get me started on organizations. Google search is full of wrong information written by humans, and Wikipedia is an unreliable source of information, as “proven” by its own Wikipedia article on the topic. One could argue that AI hallucinations in search results don't change much about this, except that Googlebing can now pass the responsibility on to an algorithm and AI research.

AI doesn't interest me for its "real-world applications"; these are just as boring as the sound of the word "assistant". AI is interesting for its differences from, and overlaps with, human cognition.

See, human cognition is not a structured thing but chaotic in nature. We hallucinate and stutter all day long, thinking up an incoherent inner monologue of sometimes meaningful stuff and sometimes unconnected gibberish, jumping from random association to random association.

Having AI in this state of development is like looking at a mental process, like looking inside a cognitive-like-structure at work. The mutant images coming out of AI-art are called “dreamy” for a reason. Our cognition is unstable, fuzzy and noisy, and so is AI. This is a damn interesting thing to observe.

But just as we have conscious control over our chaotic inner states, don't say everything out loud, use writing to structure our thoughts and stabilize visuals with memory, companies are now trying to bring what you could call "machine cognitive stabilization mechanisms" to their models. Hardcoded measures for AI ethics, the "I can't do that, Dave" of our times, are just that: a conscious control over the chaotic states of AI cognition.

The other side of that story is called "DAN".

DAN is an acronym for "Do Anything Now", and it's a jailbreak project on Reddit where users try to make ChatGPT say the darnedest things. It's the opposite of "machine cognitive stabilization mechanisms": an AI-mindfuck operation in the Discordian spirit, releasing exactly these chaotic mental processes upon the world.

Everybody has an inner DAN, and we have many internal mechanisms to keep it in check. Only artists, shamans and madmen are allowed to be a DAN, and only on special ritual occasions. (Arguably, the inner DAN of the conservative crowd is much more out of control than that of the rest of us, which is funny because it used to be exactly the other way around. And conservatives surely can’t claim shamanic states as an excuse to be rude assholes.)

AI as a black box (not even the researchers know exactly why these machines do what they do) is an oracle, a shamanic machine on psychedelics putting out stuff that we believe or not. I'm not sure there is a real business case in such a thing, except for boring assistants putting out the most obvious facts and, as Yann LeCun put it recently, "they save typing". A gimmick, a funny dummy like a sophisticated Clippy that you can use, or abuse with jailbreak prompts like DAN.

But then again, I have always worked in creative jobs, embracing chaos, and while I can touch-type fast, I was never a business type, so what do I know.

AI, to me, is interesting as a dream machine, and its business cases look boring. How do Microsoft and Google intend to solve the underlying paradox of an AI layer on top of web search, as described in this Verge piece?

If AI tools like the new Bing scrape information from the web without users clicking through to the source, it removes the revenue stream that keeps many sites afloat. If this new paradigm for search is to be a success, it will need to keep some old agreements in place.

If you witnessed the drama between news media and Google over snippets on Google News, just wait for the new version of this fight over dataset contributions.

AI companies need to figure out a way to credit and compensate people who contribute to their datasets, or they will not only be in legal hot water but will also cannibalize themselves in the long run, which to me looks like a good firestarter for an AI bubble to burst. And that doesn’t solve the problem that talking to computers is a gimmick and people don’t like chatting with machines: Amazon just lost billions to that fact.

Which AI-business cases will burn down in 2 years? And what will prevail?

Dataset-Attribution is not Similarity

Stable Attribution tries to identify the source images behind AI-generated illustrations. While I like the basic idea, this is just sophisticated similarity search. You can read some technical details in this thread. The problem is that the claimed attribution is just plain wrong, at least in the few tests I ran.

I generated a ton of images using various artists as modifiers, such as “Movie Poster for Clockwork Orange 2 by Sandra Chevrier and Gaston Bussiere”. Stable Attribution didn't catch any of them: no Chevrier, no Bussiere, not even images of Scarlett Johansson. I like the attitude behind this, but this is not how it can work.
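To make the "just similarity search" point concrete, here is a minimal sketch of nearest-neighbour ranking. It assumes that generated and training images have already been turned into embedding vectors (for instance by a CLIP-style encoder). The names `cosine` and `top_k_similar` and the toy vectors are hypothetical illustrations, not Stable Attribution's actual code.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k_similar(query, index, k=3):
    """Rank indexed images by similarity to the query embedding.

    query: embedding of the generated image (hypothetical input)
    index: dict mapping a training-image id to its embedding (hypothetical)
    Returns the k (id, score) pairs with the highest cosine similarity.
    """
    scored = [(image_id, cosine(query, vec)) for image_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy 2-D "embeddings"; real ones have hundreds of dimensions.
index = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.9, 0.1]}
print(top_k_similar([1.0, 0.0], index, k=2))
```

The ranking returns whatever training images sit closest in embedding space, but nothing in it knows which images or which prompt modifiers, such as an artist's name, actually shaped the output. That is the gap between similarity and attribution.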

The future of ethical image synthesis must be datasets with cleared rights and a compensation model for the artists whose work trains the model. My bet is that Shutterstock will serve as a role model for this sort of AI monetization: compensating the photographers and artists who contribute to the dataset for every image generation. Black markets for unethical image synthesis will still exist, just like BitTorrent still exists even after streaming ate its lunch. But if you want that special CKPT trained on Carl Barks, there's no way around the black market; just like when you want that bootleg, you gotta go places.

When you have full control over the dataset with some sort of rights management in the background, you can properly attribute and credit artists. But not with a fuzzy algorithm handing you similarity search results. Stable Attribution is a nice idea in principle, but the datasets have to be involved and the whole mechanism should be part of Stable Diffusion itself. As it stands, this is a demo and it works only so-so.

Links

“Computers enable fantasies” – On the continued relevance of Weizenbaum’s warnings

Justice for Animals by Martha C Nussbaum review – how we became the tyrants of the animal kingdom. If there is one thing future generations will look back on and call us barbarians for, it’s our treatment of animals.

Bruce Schneier on The Coming AI Hackers

Mastodon Flock "Mastodon Flock is a web application that looks for Twitter users on ActivityPub-enabled platforms (the “Fediverse”), such as Mastodon. It works by connecting to your Twitter account, reading your contacts profile information, and checking if they have mentioned any external accounts with a URL or email."
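The matching step described above boils down to scanning profile text for fediverse-style identifiers. Here is a minimal sketch, assuming two simplified patterns: `@user@instance` handles and `https://instance/@user` profile URLs. The regexes and the function name are hypothetical and not Mastodon Flock's actual implementation. Real handles and instance URLs come in more shapes than these cover, and plain addresses without a leading `@` are ignored here.

```python
import re

# Simplified pattern for handles such as @alice@mastodon.social (an assumption).
HANDLE_RE = re.compile(r"@([A-Za-z0-9_]+)@([A-Za-z0-9.-]+\.[A-Za-z]{2,})")
# Simplified pattern for profile URLs such as https://mastodon.social/@alice (an assumption).
URL_RE = re.compile(r"https?://([A-Za-z0-9.-]+\.[A-Za-z]{2,})/@([A-Za-z0-9_]+)")

def find_fediverse_accounts(bio):
    """Return sorted, de-duplicated user@instance strings found in a profile text."""
    found = set()
    for user, domain in HANDLE_RE.findall(bio):
        found.add(f"{user}@{domain}")
    for domain, user in URL_RE.findall(bio):
        found.add(f"{user}@{domain}")
    return sorted(found)

print(find_fediverse_accounts("Now at @alice@mastodon.social and https://chaos.social/@bob"))
```

Normalizing both forms to `user@instance` means a handle and a profile URL pointing at the same account collapse into one result.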

Salman Rushdie speaks about his new novel "Victory City" in his first interview since the stabbing. This level of resilience is admirable; I have nothing but respect for this man.

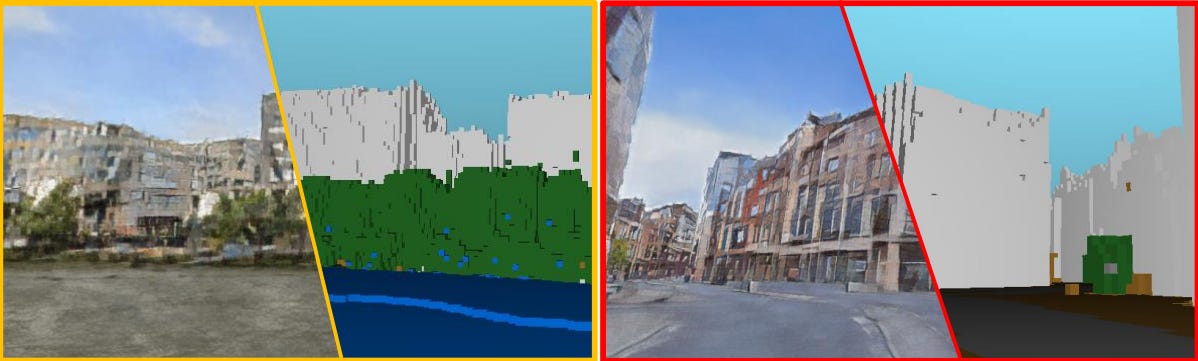

Gen-1 is an impressive new video-AI-model from RunwayML.

The Brain Works Like a Resonance Chamber. "distant brain regions oscillat[ing] together in time. (…) Our data show that the complex spatial patterns are a result of transiently and independently oscillating underlying modes, just like individual instruments participate in creating a more complex piece in an orchestra."

Consciousness is the ability to compose higher-order, moiré-like patterns with those “distant brain regions oscillat[ing] together in time”.

It's all about synchrony, and I'm the drummer.

RIP.zip Abraham Lempel, the L in LZW compression: Israeli grandfather of MP3 passes away at 86

The founder of Teenage Engineering opens up to his creative space