Of Mermaids, Editology, and AI

Life is too short to be outraged at the problematic instance of Avengers Infinity Wars seed 3273567.

About Edit Wars

So there was a racist on Reddit who whitewashed Disney's black mermaid with AI technology. Ryan Broderick has a piece about this "Logical Endpoint Of Toxic Fandom", and while I don't like several things about this piece, none of them take away from the fact that there are people who actually complain about the skin color of a mythological archetype that is available to all people by default. I don't know if "pushing the losers" will help very much, but I want to add some points about the bigger picture at work here.

For some years now I have been using the term "editology" to describe our digital environments. By this I mean that this technology enables us to edit how we perceive others and how we are perceived by them in return. And it used to be that you had to be skillful to edit the digital. Back in the day, you literally needed punchcards and transistors to edit the digital; later you could use some form of language to do this, and we called these languages "Assembler" or "Basic" or "Fortran". Then we invented Graphical User Interfaces to talk to machines and edit the digital, back when communication with other people behind machines was sparse.

Today, with a click of a mouse, I can send a message around the world, potentially seen by millions, in which I pretend to be a dog, and I can produce a sweet, totally unreal photo of a golden retriever on a bike to go along with it. I can record a podcast in the fake voice of a talking dog and create a whole Twitter feed for the amusement of my friends. This is not only possible with dogs, but also with every person alive. Tomorrow, I will be able to do this in video and movies and all the media you can think of. I will be able to pretend to be anything I want, and create anything I can imagine, and all I need is a prompt which tells Dall-E v10 to create this or that timeline of a person from a parallel universe, including all the fake memorabilia. This turns our media environment, through which we communicate and play and inform and entertain ourselves, into a liquid substance which we can form into whatever we like, including black and asian and latino and, yes, white mermaids too.

We're already living with some of the side effects of editology: completely outlandish fantasy worlds merging with reality in the form of weird conspiracy theories, which at their beginnings are empty canvases ready for a gamified race toward the craziest stuff you can come up with. Or people having cosmetic surgery to look more like their photo filters, or the rising mental health issues among young girls who compare themselves to fake Instagram feeds, or the epistemic distortions in which we value DIY knowledge production ("Do your own research") more than that of established experts.

All of this is based on the hyper-customization made possible by digital tools: editology is the basis for all of those phenomena.

We made our symbolic world editable and packed it into a pocket computer for everyone to play with, and now we wonder why people create reactionary mermaid versions with it. The editological principle of mermaids states that all mermaids exist in all variants in latent space, and somebody will find them and edit them into variations of Disney movies. Editology also means we're having a fight over the logos, about which version of those mermaids represents truth. That's roughly 9 billion truths in the RAM of our pocket computers and laptops, fighting over which one is more authentic than the others, more zeitgeist, or more just. That's a lot of fighting Plato's caves. Too many for my taste.

And here's the news: This is, still, just the beginning. Imagine that editological technology cannot only whitewash identitarian corporate business decisions in modern myth-making, but also dreams in technologically connected consciousnesses, which sounds very far out until you read a paper on neuroprivacy.

For me, this "debate" about a mermaid is much simpler than that: As stated in the first paragraph, the mermaid is a mythological archetype, and as such she is available to all humans by default. Until now, her visual depiction only represented a small, powerful minority of humans, and we're changing this now, which is a good thing. More people can hold a beloved archetypal mythical creature closer to their hearts. Changing this back, just because you can, is mean-spirited and stupid. But for what it's worth: I don't think that stupid is newsworthy.

We're building a machine that can manifest dreams in audiovisual media, and those dreams include that of racists. We can be outraged at every instance of this, after all, racism sucks. But I also think that life is too short to be outraged at the problematic instance of Avengers Infinity Wars seed 3273567. These things will happen, because AI in combination with editology means that we will have everything, everywhere, all at once.

Deepfake-Elvis at America's Got Talent

Elvis Presley performed live on stage two days ago at the finale of America's Got Talent, together with some minor celebrities nobody cares about. Elvis Presley, as you might have guessed, was not a real human being, because the real Elvis is still alive and well and drinks Shasta Black Cherry at a bar in Honolulu right now, chuckling about his deepfake twin. Anyways.

The Elvis at the AGT finale was possibly the biggest live performance by an artificial human made with deepfake technology. The holographic ABBA performing at the Wembley Stadium played in front of an audience of roughly 100k people. The artificial Elvis was seen by at least 6 million people.

(Technological sidenote: Please note how the actual performer on stage barely moves his head and keeps it in place. This is to make sure the Elvis illusion stays undistorted, without visible artifacts, and that his face is always recognized by the algorithms during the live performance. While the technological outlook is mind-blowing, it’s also still shaky in the details. First rule of AI imagery: Don’t look closer.)

The performance was done by Metaphysic, a company that specializes in artificial humans. You might know them from the viral Deep Tom Cruise, who is actually Miles Fisher. Fisher recently wrote about his adventures in deepfakery for the Hollywood Reporter, and he is a cool motherfucker just for making this brilliant American Psycho spoof and this fun promo for Final Destination 5, which I blogged about 13 years ago.

You can read more about Metaphysic at the Nvidia-Blog.

However. An artificial Elvis Presley and a deepfake company performing at the finale of America's Got Talent marks the latest step in the mainstreamification of synthetic media and artificial intelligence. Many, many more people are aware of this tech now than when I started writing about it in 2015.

And while this Elvis is only a nice gimmick, it's also a sign of the times, in which the boundaries of reality and fiction get blurry and we enter an age where we can manifest anything we can imagine. This might turn out to be a bittersweet superpower.

But until we figure out what this technology even is that we are creating, it's perfectly fine to shake a leg.

Heaven knows how you lied to me,

You're not the way you seemed,

You're the devil in disguise.

Gene Wolfe AI’d

Wolfe’s Arcana. Or: Midjourney, Meet Gene Wolfe: “I think Gene would be totally tickled by the idea of a computer iterating the greater trumps, based entirely on his poem ‘The Computer Iterates the Greater Trumps.’”

The Hanged Man hangs by his feet, Knew you that? His face, so sweet, Almost a boy’s. He hangs to bleed. Who waits to eat?

Killing In The Name Performed By The North Korean Military Chorus

Little Planet Wall of Death

I love Walls of Death for some reason. I like driving as such, and I like loops, and I like distorted perspectives. A Wall of Death has all three and it's a form of stunt driving, so what’s not to love? Having said that: Using little planet photo editing to create a panoramic wall of death is a brilliant VFX idea.

(Screenshot taken from this music video for the song "Eggshells" by Dehd, which I’ll feature sometime today in my music video sideblog GOOD MUSIC.)

New tools for artists to manage AI training data

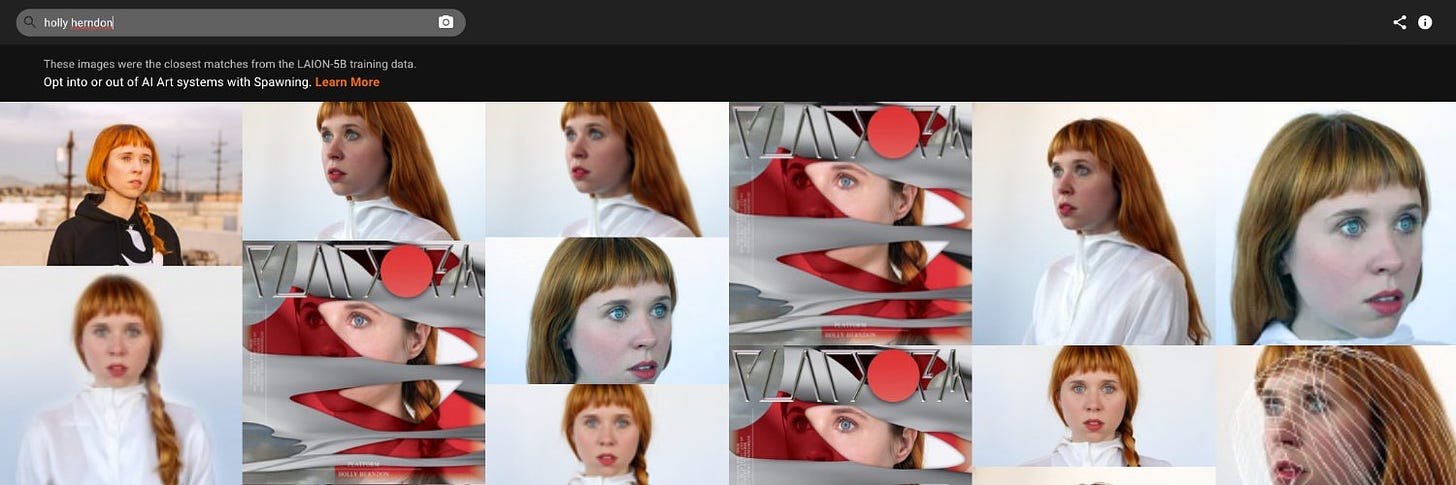

Holly Herndon (whom you might know from her AI deepfake art piece Holly+, in which she sells shares of her recreated AI voice) and Mat Dryhurst introduce Spawning, an organization that is “building tools for artist ownership of their training data, allowing them to opt into or opt out of the training of large AI models, set permissions on how their style and likeness is used, and offer their own models to the public.”

haveibeentrained.com is a search engine in which artists can find out if their works are part of the training data used by image generators like Dall-E or Stable Diffusion, and use it to opt in or out. I don’t know how they want to enforce possible opt-outs or if those opt-outs have legal merit. But it’s a nice first step in the right direction.

From their FAQ:

Copyright is an outdated system that is a bad fit for the AI era.

A new era offers us the opportunity to reconfigure how we treat IP! We believe that the best path forward is to offer individual artists tools to manage their style and likenesses, and determine their own comfort level with a changing technological landscape.

We are not focussed on chasing down individuals for experimenting with the work of others. Our concern is less with artists having fun, rather with industrial scale usage of artist training data.

Online Art Communities Begin Banning AI-Generated Images; here’s a good comment on this on Hacker News.

Hacker News Guidelines, explained in Victorian English with GPT-3

"AI image synthesis is inconsistent and can't be used for coherent storytelling required for media like film or comics" Technology: Hold my beer. StoryDALL-E: Adapting Pretrained Text-to-Image Transformers for Story Continuation.

Diablo, A Direct-Drive Wheeled-Leg Robot

Douglas Rushkoff turned his bizarre 2018 meeting with the super-rich, in which they talked about their prepper plans to escape some apocalypse, into a book. Survival of the Richest is available now; here’s an excerpt in The Guardian: The super-rich ‘preppers’ planning to save themselves from the apocalypse.

How to nurture a personal library

Bestselling authors explain how they organize their bookshelves and what's on them

The joy of crime fiction: authors from Lee Child to Paula Hawkins pick their favourite books

First Batch of Color Fonts Arrives on Google Fonts