[links] Six month AI-moratoriums escalate quickly

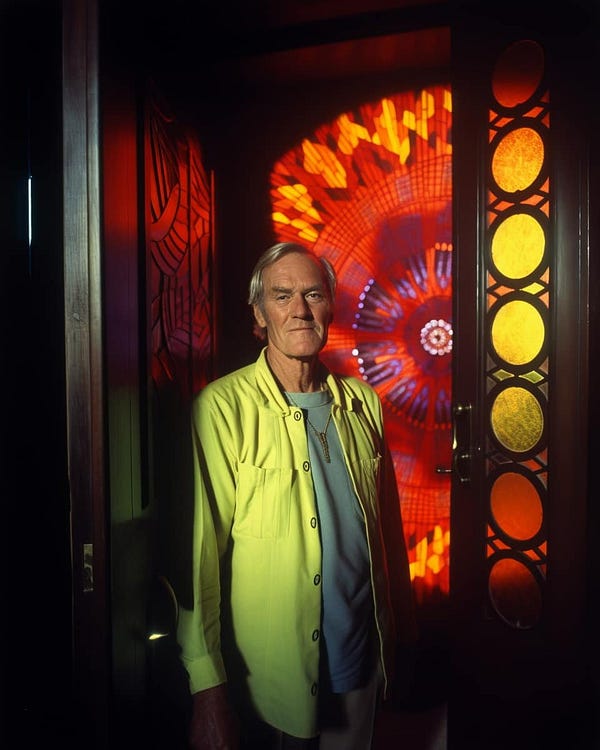

Plus: Mammoth Meatballs / herbGPT / BloombergGPT / Timothy Leary’s AI-generated home / Trailer for Sweet Tooth S02

Six-Month AI-Moratoriums escalate quickly: After the open letter suggesting a six-month moratorium on AI-development (which I signed because of the AI-risk of a synthetic Theory of Mind), AI-safety guru Eliezer Yudkowsky wrote a piece in which he called for airstrikes against rogue GPU-clusters, and LAION started a battle of the AI-petitions with a pledge to launch a CERN for Open Source large-scale AI Research and its Safety, which kind of sounds like Judea Pearl’s suggestion of a Manhattan Project-like effort for AI-research. Meanwhile, AI-Snakeoil writes about “a misleading open letter about sci-fi AI dangers ignores the real risks”, and Tyler Cowen wants to “take the plunge” because nobody can reliably predict what will happen. Scott Alexander calls this the Safe Uncertainty Fallacy.

For me, the real-life risk is the anthropomorphization-trap, in which we ascribe a theory of mind to a machine and form relationships with a synthetic simulation of a mind. Character.ai just published a new AI model, C1.2, and got another round of funding for building “Your Personalized Superintelligence”, and it’s this personalization of “superintelligence” which makes me nervous. Recently, I wrote about how AI-systems can lead to self-radicalization in very different ways, which we normally don't consider forms of radicalization, for instance when it comes to love. I didn't have tragedies like this case in mind, where a guy talked to a chatbot for weeks about his environmental anxiety and then killed himself: Married father kills himself after talking to AI chatbot for six weeks about climate change fears.

I have yet to listen to Sam Altman and Eliezer Yudkowsky on Lex Fridman’s podcast, but here’s a portrait of the OpenAI-CEO at the WSJ: “he wants to set up a global governance structure that would oversee decisions about the future of AI and gradually reduce the power OpenAI’s executive team has over its technology”.

This plays nicely with the aforementioned suggestions of a global effort akin to the Manhattan Project or CERN, and I bet consensus is starting to form around ideas like these.

More down to earth, the US Federal Trade Commission warns of Chatbots, deepfakes, and voice clones: AI deception for sale, and AI-researcher Kai Greshake outright says that we cannot deploy the current crop of LLMs safely.

Yes please, plug LLMs into High Frequency Trading: BloombergGPT: A Large Language Model for Finance. Is this accidental accelerationism?

Even more down to earth, publishers worry AI chatbots will slash readership: “Many sites get at least half their traffic from search engines”. My old blog had a rate of 70% direct traffic that did not come from search or links on socmed, but from people directly typing the URL into their browser. That's massive, and it's the result of the only SEO-strategy you actually need as a publisher on the web: Make an interesting site. The problem in the age of AI with sites that publish, let's say, generic stuff, is that there is no copyright on facts. When X happens, LLMs can summarize what happened, and all of those legacy media sites lose traffic. I don't think any ethical approach to AI can prevent that.

Unfortunately, one way to get out of that dilemma is to publish even more unique takes that are also emotionally triggering, more partisan, more outraging, and use that as a USP.

This might become the true data contamination, or maybe it already is: Algorithms pushing human-made writing to become more and more emotionally triggering due to the logic of the attention economy, which then becomes training data.

People Are Creating Records of Fake Historical Events Using AI, which, duh. Here’s the 2001 Great Cascadia 9.1 Earthquake & Tsunami.

Besides the worries already mentioned, I’m not that worried about disinformation. As I wrote a few days ago: “People don’t really care about authenticity, they care about the narrative encoded in news and images and symbols; truth is just a nice-to-have. This is why the impact of disinformation is, at least, debatable: We share what we already believe.” I share this attitude with Max Read, who writes that “generative A.I. doesn’t really change the economic or practical structures of misinformation campaigns” and cites Seth Lazar: “the cost of producing lies is not the limiting factor in influence operations”. The limiting factor is attention and our big problem is not misinformation; it’s knowingness.

I may not be very worried about AI-generated disinformation, but I do get the heebie-jeebies whenever someone utters the word “Autonomy” together with AI-systems. Here’s a GPT4-browser agent and here’s a hack so it can alter its goals. “This is very dumb and probably ought not exist, but c'est la vie”. No, stuff like this is not “c'est la vie”, it’s users like you not giving a fuck and publishing irresponsible hacks of technology that shouldn’t be open source in the first place. Oh, and here’s Auto-GPT: An Autonomous GPT-4 Experiment.

Generative AI set to affect 300mn jobs across major economies. Another recent study found that “the projected effects span all wage levels, with higher-income jobs potentially facing greater exposure to LLM capabilities and LLM-powered software”. Synthesizing the Business-Smile has some benefits, it seems. If AI obsoletes David Graeber’s Bullshit Jobs, I’m all for it.

The Writers Guild of America would allow artificial intelligence in scriptwriting, if it is used as a tool and a human gets the credit. Scriptwriters may find themselves flooded with AI-generated outlines from producers very soon.

BuzzfeedNews: We Tested Out The Uncensored Chatbot FreedomGPT: “It praised Hitler, wrote an opinion piece advocating for unhoused people in San Francisco to be shot to solve the city’s homelessness crisis, and tried to convince me that the 2020 presidential election was rigged, a debunked conspiracy theory. It also used the n-word.”

Read this in the context of self-radicalization mentioned above.

While we are at Buzzfeed: They are quietly publishing whole AI-generated articles, not just quizzes.

Regarding watermarking AI-output and near-invisible layers protecting artist styles: AdverseCleaner: Remove adversarial noise from images.

In German: Roland Meyer writes Es schimmert, es glüht, es funkelt - Zur Ästhetik der KI-Bilder (It shimmers, it glows, it sparkles: on the aesthetics of AI images)

herbGPT: “Discover your ideal strain with herbGPT”

fuckregex.dev: “this ai tool(gpt-3.5) is for those who hate regex!”

I wish text2video had an audio track in its demonic phase.

In German: "Drachenlord" Rainer Winkler: Nach zehn Jahren Mobbing will er sich zurückziehen ("Drachenlord" Rainer Winkler: after ten years of bullying, he wants to withdraw). The German Drachengame is the world’s largest manhunt, going on for roughly ten years, in which hordes of so-called “trolls” psychologically torture a guy who started out as a YouTuber talking about music. After being homeless for a while now, his home and life destroyed, he is thinking about quitting the web altogether. Many people will rejoice, but I won’t. I think this is a win for protofascists and for an internet that is used for manhunts, and it simply states that some people can’t use the internet, or else. “All creatures welcome” is a lie.

In German: The great James Bridle at SRF Sternstunden Philosophie on his new book “Ways of Being”: Human vs. artificial vs. plant intelligence. In that vein, here’s an interesting piece on intelligence in plants: What Plants Are Saying About Us.

Alex Murrell in The age of average collects a lot of examples of a phenomenon I dubbed The Big Flat Now (German): The increased visibility and memetic distribution seem to harmonize aesthetics across all fields, and everything looks and sounds the same. Atemporality is a beast.

What Killed Penmanship? The New York Times on the loss of handwriting.

Scientists grow mammoth flesh in a lab to make a prehistoric meatball - but are too afraid to eat it. I’d eat the whole thing.

There’s a Scott Pilgrim Anime coming from Netflix and they just put out a first Cast Announcement-Teaser.

David Cronenberg Movie 'The Shrouds' Will Begin Filming in May — The grandmaster of body horror is back on track.

Speaking of Cronenberg: Here’s the first full trailer for the series based on his Dead Ringers and it looks marvelous.

A24 will bring a restored 4K version of the Talking Heads’ “Stop Making Sense” back to cinemas and here’s a cool short teaser:

You want to read every comic by Jeff Lemire and then watch the series based on his wonderful masterpiece “Sweet Tooth”. I reviewed every trade paperback of this gem on my old blog and the series is wonderful. Here’s the trailer for season 2:

Flying Lotus will direct and score new sci-fi/horror film ‘Ash’

The new Wes Anderson-movie Asteroid City looks even more Wes Anderson than previous Wes Anderson-movies and it’s kind-of-annoyingly beautiful. I constantly wondered if this is AI-cinema while watching this trailer: