Yesterday, after a marathon negotiating session, the EU agreed on the outlines of the "AI Act," its framework for regulating artificial intelligence. In summary, the AI Act mandates transparency about training data and the protection of copyrights, as well as strict rules for higher-risk applications of AI in critical infrastructure, policing and public authorities, and the use of biometric data. While it is not the world's first AI law, as Ursula von der Leyen claimed on TwiX (China enacted its AI rules in August), it is certainly the most comprehensive. Details about the AI Act can be found on the European Council's website.

But this post is not really about the AI Act.

In his half-hour presentation at the CogX Festival in London a few weeks ago, Stephen Fry took a few steps back and aimed at the big picture. The coming wave of technological progress will not stop at current developments, namely the statistical models generated by algorithms from Big Data, with their remarkable capabilities and shortcomings (in other words, today's AI systems). Instead, ever-growing computing power will accelerate innovation even further. Today, we contemplate an epistemic crisis of world knowledge in the era of deepfakes, surveillance, and AI-driven bioweapons, while brain-computer interfaces and quantum computers are already emerging on the horizon. At the same time, the most pressing of all crises, climate change, is looming: it has been showing its first effects for several years, and emissions keep rising to new records. In short, how can we ensure that the upcoming real-life version of what is commonly called the "Singularity" turns out to benefit humanity?

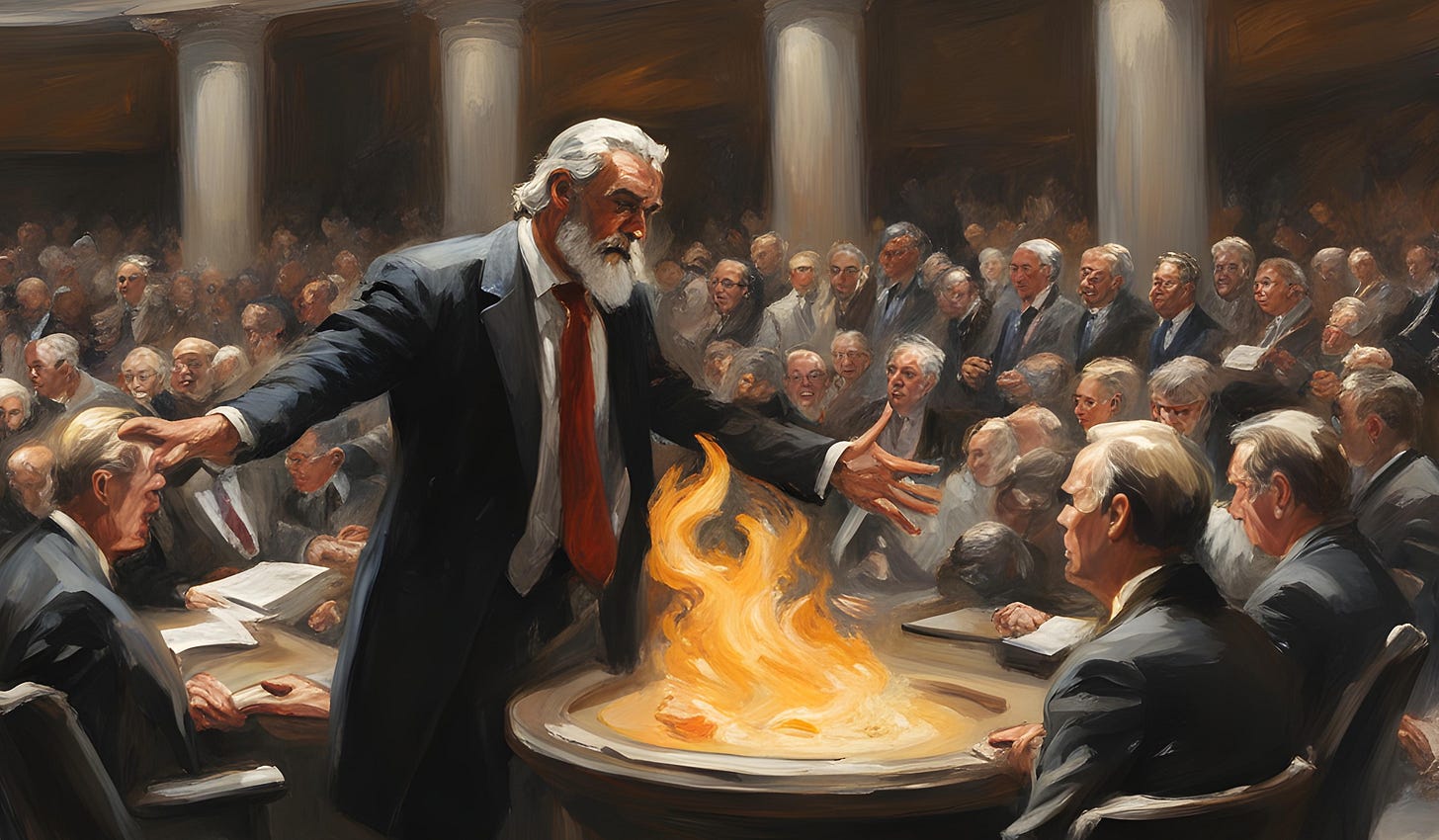

Fry doesn't provide a conclusive answer. Instead, he retells the well-known Greek myth of Prometheus, who stole the beneficial fire from Zeus and gave it to humans. As punishment, Prometheus was chained to a rock, where vultures ate his liver for eternity, and Zeus sent Pandora's box to Earth, releasing all the evils among humans until only hope remained.

Fry presents us with a choice: do we want to be the fire-giving Prometheus, handing humans the spark of artificial intelligence, or Zeus, the father of the gods, imposing strict regulation in the face of the technological leaps, especially AI-based ones, that are now emerging? Deep learning algorithms recently computed 2.2 million new crystal structures, "800 years’ worth of knowledge," which can be used for novel technologies and innovations, for example in the production of solar panels. Another study demonstrates the ability of large language models to discover new molecules for pharmaceutical research, promising new and possibly cheaper drugs. A study from July on accelerating scientific research with AI found that training AI systems to explicitly account for the human contribution to the research process (those rare Einsteins proposing completely novel theories) improved these systems' predictions of future discoveries by 400%.

These things are possible today.

I myself advocate slowing down development (mostly for psychological reasons) and strong AI regulation, as outlined in the EU's AI Act. So I guess I’d choose Zeus and chain Prometheus to the rock, but I’d spare him the liver-eating vultures, because come on.

In "Against open sourcing Automatized Knowledge Interpolators," I argued against open-source AI a few weeks ago: AI systems can produce the scientific leaps listed above because they can interpolate their training data comprehensively and at unprecedented speed. You train an AI on known crystal structures, and out come a few million new crystals within a few hours. You change the algorithm and get 40,000 potential new chemical weapons.

This fundamentally new, non-human power to interpolate existing knowledge amounts to epistemological nuclear fusion. Strict regulation of this epistemological power seems necessary to me, and the techno-traditional approach of free, open-source development appears at least somewhat dangerous here.

I also don't think that we only have the choice between Prometheus and Zeus.

The AI spark that Stephen Fry talks about in his presentation is, in fact, primarily Big Data, since all AI models rely on enormous amounts of training data. And it is precisely this data that the EU has now regulated, insisting on data transparency and quality. In terms of Stephen Fry's metaphor, the EU insists that Prometheus' gift of fire be a properly controlled flame rather than a flickering, uncontrollable wildfire.

The EU's AI regulation updates the ancient Greek myth of Prometheus in one unifying legal act: in this version, Zeus hands his flame to Prometheus himself, but insists on controlled sparks, so it doesn’t set villages on fire, and on transparent fuel procurement, so the flames don’t ruthlessly exploit the people's firewood.

I can, for now, live well with this new, freely adapted, unified Stephen-Fryan AI mythology of the EU.
