Whibbly Whobbly AI-Wurstfingers

AI-Links 2023/09/02: AI-Drone beats human racer / Pixar's Twin Peaks / Generative AI and Intellectual Property / ChatGPT on an Atari 800 XL and C64 / AI-Safety vs Buttplugs and more.

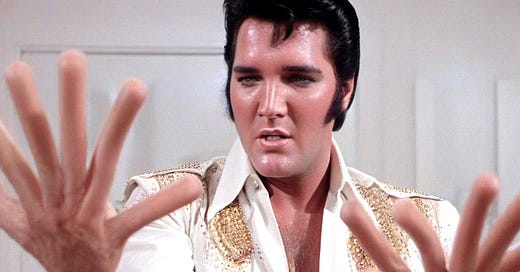

The most important Stable Diffusion finetune has dropped. AI Hands XL transforms hands into whibbly whobbly "Everything Everywhere All At Once"-hotdog-fingers.

In Germany, we use the word "Wurstfinger", literally translating to sausage-fingers, to describe, well, thick real-life fingers that kinda sorta look like sausages. This is a Wurstfinger-model, and i praise that fact.

The second most important Stable Diffusion finetune is this "SDXL fine-tune on really bad early digital photos with poor use of flash and crappy lighting". You can play with it on Replicate and here’s Scarlett Johansson chilling in a nerd's gamer room during the shooting of The Blair Witch Project in the 1990s.

High-speed AI Drone Overtakes World-Champion Drone Racers. This is framed as "AI beats humans at sports" and "real-world applications include environmental monitoring or disaster response", but i'd at least also mention that "this tech is very possibly coming to the borders of totalitarian states soon and will hunt down refugees in Syria", for instance.

The future is talking into the void with the machine generating "synchronous, semantically meaningful listener reactions."

I've argued before that the AI-safety debate reminds me of the discourse surrounding gun regulation in the US and i've used the 'only a good guy with a gun'-argument in this piece as an ironic illustration. Yann LeCun uses this argument unironically to make a point for open sourcing everything AI. I don't want open source systems capable of designing thousands of new chemical weapons, just as i don't want open sourced guns ready for 3D-printing.

The fine smell of Darwin Awards in the morning: The New York Mycological Society (NYMS) warned on social media that the proliferation of AI-generated foraging books could “mean life or death.”

This is both related to my warnings that LLMs, which are the first entity in existence to "produce language" besides humans, might therefore have unforeseen consequences for the human psyche, and my recent post about my lack of an inner monologue: Language Is a Poor Heuristic for Intelligence.

Language is downstream from intelligence, which first enables us to mimic our social surroundings, parents in most cases, and thus we learn language. But deaf people, for instance, are perfectly intelligent and absolutely capable, as are many if not most children on the autistic spectrum. So when tech-bros and AI-companies insist that language capabilities of LLMs are signifiers for intelligence, understanding, AGI or superintelligence, even when that's demonstrably false, why do they do it?

Karawynn Long's answer: "One clear reason is that corporations would prefer to use machines for a number of jobs that currently require actual humans who are knowledgeable, intelligent, and friendly, but who also have this annoying tendency to want to be paid enough money to support the maintenance of their inconvenient meat sacks. Not to mention the problematic fact that humans occasionally possess ethics, independent thinking, and objectives besides maximizing shareholder profit."

I would add that it's easier to sell a cute human-like machine than a cold and stupid statistical model, but these arguments go hand in hand. But statistical models are neither conscious nor creative nor intelligent, they're just numbers.

Long makes a lot more points than that. Read the whole piece, it's good, and i wholeheartedly sign her last sentence: "This is a giant clusterfuck of a paradigm shift that’s only in its earliest stages; the upheavals will keep coming, fast and furious, and all I can say with certainty about that is: buckle up kiddos, it’s gonna be a ride."

Benedict Evans on Generative AI and intellectual property. In the first part he beats around the bush a bit: Copyright simply is not up to the task of regulating interpolative latent spaces and, more importantly, a human inspired by a painting, producing something "in the style of", is simply not the same as a multibillion dollar corporation building statistical models based on unlicensed works and producing commercial image generators. If these models were public goods with no one profiting from this research but the public, this would shift the debate in my view, but they are not.

Eryk Salvaggio imagines A New Contract for Artists in the Age of Generative AI, and we're pretty much on the same page regarding copyright: "Copyright is insufficient to understand these issues. We also need to consider the role of data rights: the protections we offer to people who share information and artistic expression online. Generative AI is an opportunity to re-evaluate our relationships with the data economy, centered on a deepening tension between open access and consent.

On the one hand, those who want a vibrant commons want robust research exceptions. We want to encourage remixes, collage, sampling, reimagining. We want people to look at what exists, in new ways, and offer new insights into what they mean and how. On the other hand, those who want better data rights want people to be comfortable putting effort and care onto writing, art making, and online communication. That’s unlikely to happen if they don’t feel like they own and control the data they share, regardless of the platforms they use."

As i've said many times here: Generative AI means a forceful return of the old Digital Rights Movement of Own Your Data.

A few days ago, i linked to an Ars Technica piece reporting on the possibility of a judge forcing OpenAI to wipe clean and retrain ChatGPT on licensed data. Now, as the paper Equitable Legal Remedies and the Existential Threat to Generative AI argues, the situation might be much more dire than that: "If we assume for now that there are legitimate copyright issues in the first generation of lawsuits, then programs like Stability AI or ChatGPT can plausibly be construed as tools capable of producing copyright infringing works under the terms of 503(b)’s destruction clause (...) A court may determine that a generative AI program not only should be retrained because of copyright infringement, but also should be scrapped altogether under the equitable remedy of destruction".

In The image synthesis lawsuits cometh i wrote: "All Stable Diffusion-Checkpoint-files 'know' what Batman looks like, because Stability and LAION used tons of Batman-images, without paying a dime to Warner, and sometimes it puts out that data unchanged. That's all the lawyers and their plaintiffs need."

Copyright continues to be a tick tick ticking timebomb for generative AI.

Not related at all but a fun wordplay: play wipEout in your browser.

Related, researchers are now developing so-called "SILO Language Models" to allow the "use of high-risk data without training on it", where high risk here means unlicensed and copyrighted data.

I am no lawyer, but inference is still part of the machine learning process and you would still build a commercial product with unlicensed data, you just outsource the risky parts to a new module in the architecture. I don't think this changes anything regarding copyright questions, but i might be totally wrong here.

OpenAI disputes authors’ claims that every ChatGPT response is a derivative work: "OpenAI reminded the court that 'while an author may register a copyright in her book, the 'statistical information' pertaining to 'word frequencies, syntactic patterns, and thematic markers' in that book are beyond the scope of copyright protection.'"

Um... this just describes a compression algorithm? If i have detailed statistical information about word frequency, syntactic pattern and thematic markers, i can reconstruct any book in detail, and that is within the scope of copyright. For instance, LZW compression is simply statistical information about the color and hue and location of pixels, and with enough of it, i can and do reconstruct any image as i do many times per day because that is the compression algorithm of GIFs. Nobody in their right mind would argue that a GIF of a licensed image is beyond the scope of copyright because in this format it is just statistical information.
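To make the GIF half of that comparison concrete, here's a minimal sketch of textbook LZW in Python. It is an assumption-laden toy: it works on plain bytes with an unbounded table, while real GIF encoders run LZW over palette indices with variable-width codes plus clear and end codes, all of which are left out. The names `lzw_compress` and `lzw_decompress` are made up for illustration. What it shows is only that a list of table indices, built purely from which byte sequences have been seen before, is enough to rebuild the input exactly.

```python
def lzw_compress(data: bytes) -> list[int]:
    """Textbook LZW: emit one table index per longest already-seen sequence."""
    # The table starts with every single byte value (assumption: byte input).
    table = {bytes([i]): i for i in range(256)}
    current = b""
    codes = []
    for value in data:
        candidate = current + bytes([value])
        if candidate in table:
            # Keep extending the match while it is a known sequence.
            current = candidate
        else:
            # Emit the longest known match and remember the new sequence.
            codes.append(table[current])
            table[candidate] = len(table)
            current = bytes([value])
    if current:
        codes.append(table[current])
    return codes


def lzw_decompress(codes: list[int]) -> bytes:
    """Rebuild the same table on the fly and recover the original bytes."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    previous = table[codes[0]]
    output = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            # Special case: the code refers to the entry being built right now.
            entry = previous + previous[:1]
        else:
            raise ValueError("invalid LZW code")
        output.append(entry)
        table[len(table)] = previous + entry[:1]
        previous = entry
    return b"".join(output)


sample = b"yes yes yes yes yes yes"
codes = lzw_compress(sample)
assert lzw_decompress(codes) == sample  # lossless round trip
print(len(sample), "bytes ->", len(codes), "codes")
```

Whether the weights of an LLM behave anything like such a lossless code is exactly what is in dispute; the sketch only illustrates why nobody treats a GIF as "just statistics".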

"The company's motion to dismiss cited 'a simple response to a question (e.g., Yes),' or responding with 'the name of the President of the United States' or with 'a paragraph describing the plot, themes, and significance of Homer’s The Iliad' as examples of why every single ChatGPT output cannot seriously be considered a derivative work under authors' 'legally infirm' theory."

The word "Yes" surely appears many times in Sarah Silverman's book, which also means that some statistical information about the Silverman-Yes is contained within the ChatGPT-Yes. I'm not sure if that qualifies as "seriously", but philosophically a latent space always is all works it was trained on, and the argument that any output by an LLM is always a derivative of all works sounds right to me.

@dreamingtulpa, who also publishes the quite-tasteful-but-totally-uncritical newsletter AI Art Weekly, reports on the Scenimefy-model which is basically state-of-the-art style transfer for anime scenes. He gleefully writes that "The anime industry might soon get revolutionized" and that "The team behind Scenimefy also graced us with a high-res anime scene dataset. Pulled from nine iconic Makoto Shinkai films, it's a gold mine for future research and anime transformations."

It's amazing to me how the guy mentions "dataset for research" and "anime studio" in the same thread, clearly driving home the point of every AI copyright complaint, namely that applied GenAI is exploitative of fair use regulation: You can train your models on unlicensed datasets for research, but not for commercial products.

If these models are widely adopted by anime studios, we'll have the interesting situation where Japan did away with copyright for AI dev, while their near sacred animation sector gets exploited without compensation. We'll see how this goes: "a recent Arts Workers Japan Association survey of about 25,000 artists found that roughly 94% of Japanese creators are 'concerned that AI could have harmful effects such as rights infringement'."

I like AI-art, despite its unresolved problems, for its aesthetic-interpolative possibilities and all the art-philosophical questions it poses, but the blue-eyed naïveté of the media within that subculture is bafflingly n00bish regarding age old questions about digital rights. Some attitude is dearly needed here.

AI is coming for bullshit jobs, the 15739th: Consumers as likely to buy products advertised by AI-influencer models as those advertised by humans.

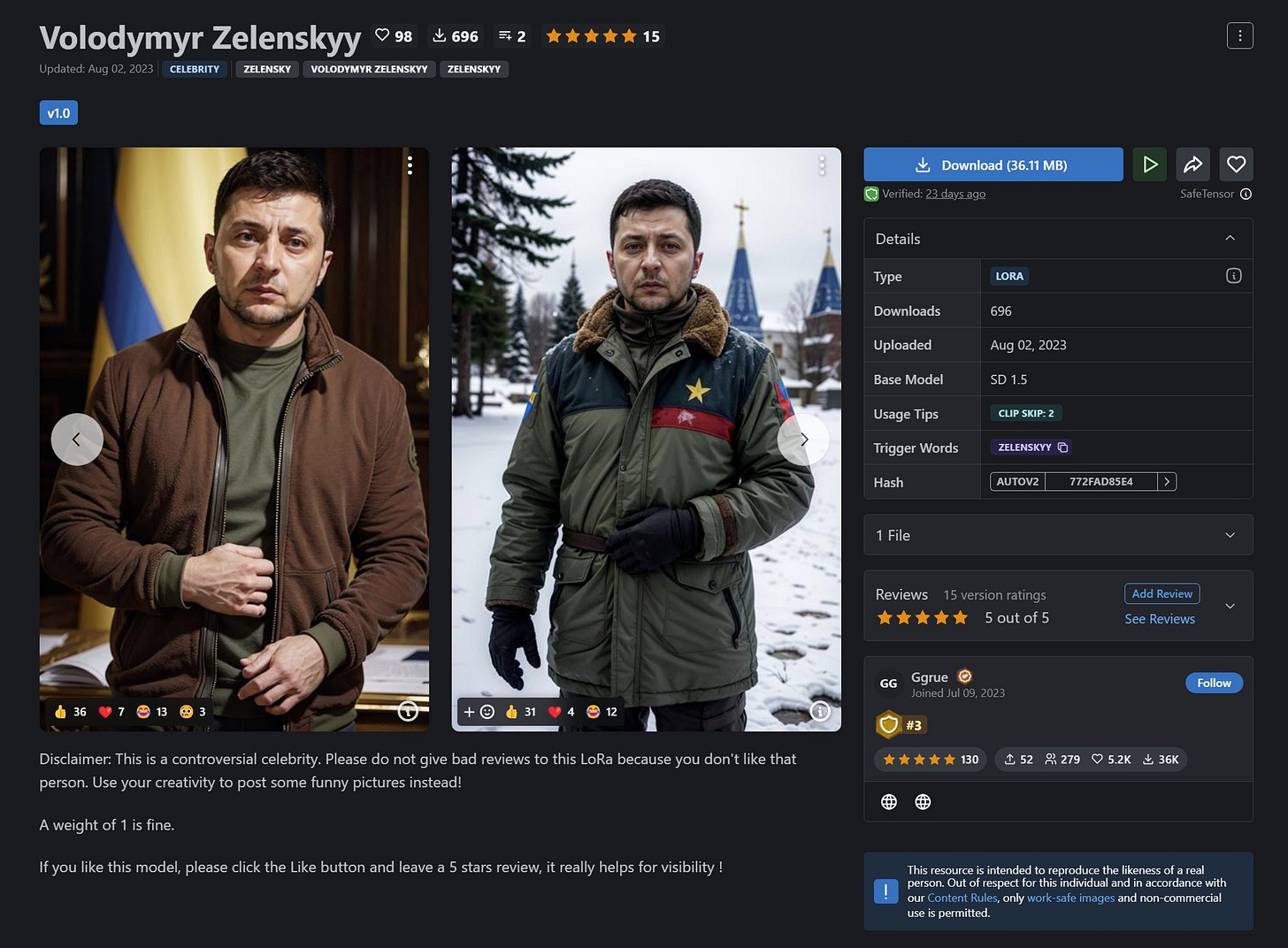

"CivitAI hosts multiple Zelenskyy AI LoRAs during an active war between Ukraine and Russia that's already been using deepfakes for disinformation."

AI predicts chemicals’ smells from their structures. Did you know that the Milky Way smells like raspberries?

Google's DeepMind presents a new watermarking method to "identify synthetic images created by Imagen", so i guess it doesn't work with other image synth. The method is interesting because it remains "detectable, even after modifications like adding filters, changing colours, and saving with various lossy compression schemes — most commonly used for JPEGs", but is not "foolproof against extreme image manipulations". What is extreme image manipulation here remains to be seen.

All of the changes shown in the examples leave the composition of the image completely intact, so my guess is it works somewhat like a subtle QR code, which can be easily destroyed by doing some stamping in Photoshop. The fundamental problem prevails: Watermarking is too easy to destroy by bad actors, who also can just use the multiple available non-watermarked open source solutions. So why bother?

Google, Microsoft Tools Behind Surge in Deepfake AI Porn: "To stay up and running, deepfake creators rely on products and services from Google, Apple, Amazon, CloudFlare and Microsoft". I recently wrote about nonconsensual AI-porn here.

Chat with AI-Shakespeare. Here's what the AI-bard says about the risk of AI being wiped out due to copyright issues: "Perchance, obfuscations may arise, and machines' speech be silenced, by the specter of copyright's weighty hand. Nay, doubtless, the masses will mourn this loss of discourse's budding bloom, should the law's strictures prevail."

I like this take on the underlying semiotic and philosophical questions posed by AI: The Scent of Knowledge, especially the first part about the disconnect of signifier and signified: "Large language models and other similar tools can capture the complex interrelationships between words and ideas, but they lack the ability to see beyond the idea and conceive of the thing it represents. For them, the data — the meta-knowledge, the concept of “lemon” without the lemon itself—is all that exists. And yet there is symbolism in their application, if not their function; much of the early buzz for generative AI lies in its function as a sort of meta-librarian, a human-usable front-end for the increasingly unnavigable straits of information that constitute our modern data seas, polluted and cluttered as they are. Rather than dive deep ourselves in search of the pearls of truth, as Google once encouraged us to do, LLMs encourage us to move beyond the data set itself. They do not search the existing internet, but surface possibility from a hypothetical that exists only as model: meta-data without the data itself."

Seemingly unrelated to AI, Jaron Lanier writes about What My Musical Instruments Have Taught Me: "I’ve never had an experience with any digital device that comes at all close to those I’ve had with even mediocre acoustic musical instruments. (...)

Human senses have evolved to the point that we can occasionally react to the universe down to the quantum limit; our retinas can register single photons, and our ability to sense something teased between fingertips is profound. But that is not what makes instruments different from digital-music models. It isn’t a contest about numbers. The deeper difference is that computer models are made of abstractions — letters, pixels, files — while acoustic instruments are made of material. The wood in an oud or a violin reflects an old forest, the bodies who played it, and many other things, but in an intrinsic, organic way, transcending abstractions. Physicality got a bad rap in the past. It used to be that the physical was contrasted with the spiritual. But now that we have information technologies, we can see that materiality is mystical. A digital object can be described, while an acoustic one always remains a step beyond us."

Former Google researchers launched an image generator with seemingly better typography: Ideogram. I tested a bit and as a typographer, i am amused.

The AI-animations of niceaunties. Here's some sushi.

AI-stat of the day: "for every $1 humanity spends on AI safety, we spend $424 on butt plugs".

Grim fantasy: Actually, humanity and all its creations are just fodder for AI. We thought we were the shining city but actually we're a field of corn.